The Online Hate Speech Interventions Map

By Charlotte Freihse & Vladimir Bojarskich

Interventions against hate speech emerge almost daily. Measures range from policies and tech innovations to counter speech projects. Given the diverse approaches to countering hate speech online, we developed a hate speech interventions map to highlight the distinct approaches of different kinds of interventions. Furthermore, we suggest that a research-practice collaboration can be beneficial to both sides.

Why we need interventions

Currently, about four in ten people come across a hateful or harassing comment on social media (Geschke et al., 2019; Pew Research Center, 2021). Adolescents and young adults, in particular, are at risk of experiencing and becoming victims of online hate (Pew Research Center, 2021; Reichelmann et al., 2021). This has consequences for individual lives as well as our social fabric. Individuals targeted by hate speech can develop symptoms of PTSD and depression (Wypych & Bilewicz, 2022). On a societal level, online hate impedes the benefits that social media brought to democracy (Lorenz-Spreen et al., 2023): outrageous and denigrating – rather than constructive and democracy-building – content is incentivized by receiving more likes and shares (Kim et al., 2021; Rathje et al., 2021). On the one hand, exposure to such content makes the average person refrain from political conversations online (Barnidge et al., 2019) and, on the other hand, it further intensifies existing societal prejudice (Soral et al., 2018). As more generations become digital natives, more people will experience online hate, and we risk losing any remaining potential of social media as a place for political participation and respectful debate – unless we find ways to curb it.

The need for an intervention map in a transdisciplinary field

Efforts to counteract hate speech and to strengthen a democratic, constructive, and inclusive discourse in the digital space are thriving – in various fields and across different actors and countries. These include legal and educational measures to prevent the onset of hate speech, communicative and algorithmic interventions that counter or curb online hatred, initiatives that seek to help victims of hate speech, research programs that monitor and study its prevalence, and lastly, organizations and groups that connect all of these initiatives into collaborative networks against online hate speech. For example, the network for civil society groups against hate speech in Germany, Das NETTZ, counts 138 actors and interventions against online hate speech (Link, 29 August 2023). Furthermore, research on hate speech has grown exponentially in recent years, from fewer than 30 to now more than 300 annual publications (Tontodimamma et al., 2021). With hate speech as a new item on the political agenda and the planned enactment of the Digital Services Act (DSA), the quantity and breadth of interventions will likely increase.

However, a comprehensive overview of existing measures against hate speech is lacking. Hate speech is of interest to transdisciplinary actors – people from civil society, legal studies, computer science, communication science, psychology, linguistics, etc. – but this also poses a risk of fragmentation, with little exchange between disciplines and each actor speaking their own specific language (e.g., consider that little consensus exists on how to define hate speech). Furthermore, without a coherent framework, exponential growth in ideas and innovations can lead to competition and actually hamper progress (Chu & Evans, 2021). With regard to interventions against hate speech, we need a coherent framework or ‘roadmap’ in order to deal with an increasing number of interventions and prevent fragmentation and competition.

For this reason, we – Nadine Brömme and Hanna Gleiß from Das NETTZ, Prof. Dr. Tobias Rothmund, and the two of us – developed the Online Hate Speech Interventions Map. We hope that a categorization system – a taxonomy – developed via a collaboration between civil society and academia can reduce one another’s blind spots and be useful to both researchers and practitioners. When planning and developing new measures against hate speech, practitioners can use this map to situate their ‘product’ alongside others. Further, organizations may find this taxonomy useful for identifying diverse sets of interventions and measures when planning networking events. In a landmark review of interventions against cyberhate, Blaya (2019) pointed to a lack of monitoring and evaluation of interventions. Therefore, researchers and NGOs can use this taxonomy as a basis to further quantify the availability and effectiveness of interventions (e.g., via evidence maps) and thereby identify whether there is a special need for one or the other intervention type and whether certain interventions could be more effective.

Development of categories

We developed the categories of our intervention map in an iterative process of (a) reviewing scientific literature on hate speech interventions and (b) identifying and classifying interventions that have been collected by Das NETTZ, our partnering civil society organization. In a literature review of hate speech interventions, we identified peer-reviewed journal articles (e.g., Álvarez-Benjumea & Winter, 2018; Bilewicz et al., 2021; Jaidka et al., 2019) and reviews (Blaya, 2019), as well as reviews by NGOs, civil society organizations, and other (governmental) institutions (Baldauf et al., 2015; Bundeszentrale für politische Bildung, 2022; Rudnicki & Steiger, 2020). This helped create a first draft of our map. We then re-evaluated and restructured our intervention map by classifying a set of existing hate speech interventions by civil society organizations and groups that collaborate with Das NETTZ. We discussed the categories in the team of researchers and practitioners in order to integrate research- and practice-oriented considerations. This iterative process of literature reviewing, classifying existing interventions, and engaging in discussions continued until we had developed a categorization system that we thought could be conceptually and practically useful. Notably, we reviewed a limited set of literature and interventions by civil society groups and, therefore, do not claim to have covered all possible categories that exist. Importantly, we consider this map a first draft that can hopefully inspire further expansions and alterations.

Explanation of categories

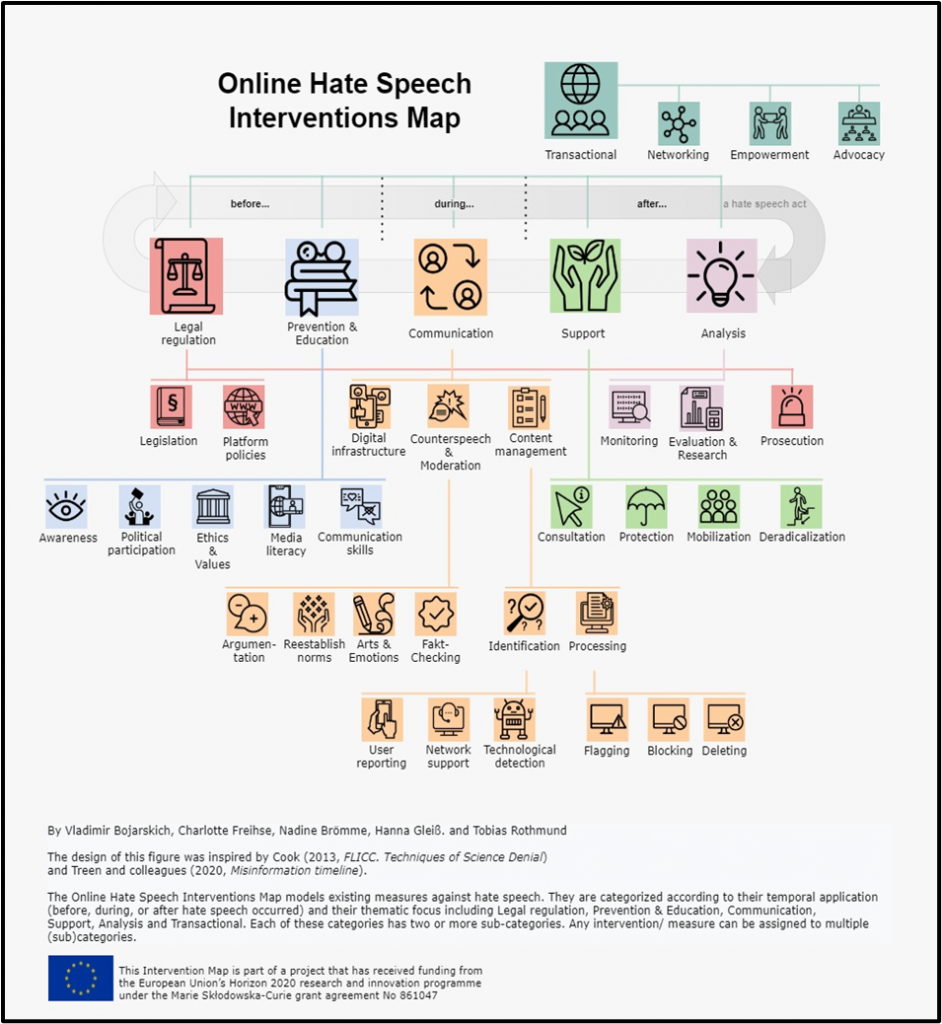

We established six higher-order categories into which interventions can be broadly sorted:

- Legal Regulation that includes systemic interventions that prevent hate speech by means of legislation, network policies, and the prosecution of offenders.

- Prevention & Education that includes approaches that educate and teach skills to critically engage with online content, to identify hateful and/or misleading content, and to engage constructively in debates.

- Communication which summarizes all interventions countering hate speech directly when and where it takes place, such as counter speech attempts and technological measures for limiting the spread of hate.

- Support that includes activities aimed at supporting victims of online hate speech. This can mean equipping victims with information on what to do or providing active legal or social support.

- Analysis that concerns the study and evaluation of the phenomenon of hate speech.

- Transactional that has a cross-sectional function: interventions that encourage activities like exchange and networking among stakeholders as well as advocacy activities with regard to hate speech.

Our first draft

The first version of the Hate Speech Interventions Map displayed all intervention types in one static figure (Figure 1). This type of visualization, however, made it difficult to provide explanations and examples for each category.

Figure 1. First draft of the Online Hate Speech Interventions Map.

The final version

Therefore, we opted for an interactive design. With the help of the media designers schultz+schultz, we designed an interactive version that provides explanations and examples for each category. The final Online Hate Speech Interventions Map can be found here.

A German version of the map can be found here.

How to read the map

Two things are important for understanding the map. Firstly, the greyish timeline shows at what point a given measure aims to intervene against hate speech: before, during, or after a hate speech act (we got this idea from Treen and colleagues’ (2020) misinformation timeline). Note that the timeline is only meant as a practical and intuitive orientation. Some interventions take place at multiple time points (e.g., blocking and deleting hateful content can also prevent the proliferation of hate speech) and reinforce one another (e.g., political participation such as mass demonstrations can influence legislation).

Secondly, we adapted the FLICC (Cook, 2020) design, which provides a taxonomy of science denial techniques, to order and visualize a taxonomy of online hate speech interventions with distinctions into higher- and lower-order categories. For example, communication interventions can be considered a broader category that includes the subcategory counter speech & moderation which, in turn, includes the subcategory argumentation-focused interventions. Importantly, any measure or intervention can belong to more than one category. Our guess is that most interventions connect to 2-3 categories. For instance, counter speech initiatives such as Forum:neuland (formerly Reconquista Internet) usually target online social norms and mobilize people to speak out against hate speech, thereby connecting the categories “reestablish norms” and “mobilization” (see Garland et al., 2022, for the effectiveness of this movement).
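For readers who want to work with the taxonomy programmatically (for example, when building an evidence map), the two ideas above can be sketched as a small data structure: categories form a tree, and each intervention carries one or more category tags. This is only an illustrative sketch, not how the interactive map is implemented. The class and function names (`Category`, `Intervention`, `is_under`) are our own assumptions, and because the position of “reestablish norms” and “mobilization” within the hierarchy is not spelled out here, they are left without a parent.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Category:
    """One node of the taxonomy; `parent` points to its higher-order category."""
    name: str
    parent: Optional["Category"] = None

    def path(self) -> list:
        """Return the category names from the top level down to this node."""
        names = []
        node = self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return list(reversed(names))


@dataclass
class Intervention:
    """A concrete measure, tagged with one or more categories."""
    name: str
    categories: list = field(default_factory=list)


def is_under(category: Category, ancestor: Category) -> bool:
    """True if `category` is `ancestor` itself or one of its subcategories."""
    node = category
    while node is not None:
        if node == ancestor:
            return True
        node = node.parent
    return False


# Hierarchy taken from the example in the text.
communication = Category("Communication")
counter_speech = Category("Counter speech & moderation", parent=communication)
argumentation = Category("Argumentation-focused interventions", parent=counter_speech)

# Assumption: no parent is given, since their placement is not stated in the text.
reestablish_norms = Category("Reestablish norms")
mobilization = Category("Mobilization")

# One intervention connecting two categories.
forum_neuland = Intervention("Forum:neuland", [reestablish_norms, mobilization])

print(" > ".join(argumentation.path()))
print([c.name for c in forum_neuland.categories])
```

Keeping the category tags as a list on the intervention, rather than storing one category per intervention, mirrors the point that most interventions connect to more than one category.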

Challenging but beneficial dialogue between scientists and practitioners

The most important part of the development process was also the most challenging: making use of feedback cycles between science and civil society. Firstly, running a participative and agile exchange between both is time-consuming. Secondly, it requires openness from both sides so that both parties understand one another’s perspectives and terminology. This can be tricky because science and practice differ not only in their approaches – theory-driven vs. practice-oriented – but also in their objectives for the map: Das NETTZ was aiming for a map that represents and categorizes all existing interventions so that it could help people find a suitable intervention, whereas the scientists among us wanted a map that helps to better understand the phenomenon of hate speech and to identify where, when, and why interventions against hate speech work. Eventually, our science-practice collaboration produced a map that we think is more comprehensive and practical than a solo-science or solo-practice approach would have produced.

Outlook

This taxonomy serves as the first comprehensive and structured overview of existing interventions across different time points (before, during, and after occurrences of hate speech) and at different levels of specificity (e.g., flagging of content vs. general content regulation). Importantly, we hope that this work can inspire scientists and practitioners in two ways. Firstly, we invite expansions and alterations. While we put great effort into the comprehensiveness of our categorization system, it is likely that we missed some intervention types or that future interventions will need to be included. Note that the FLICC taxonomy of science denial techniques (which we based our map design on) started out with five and over the years expanded to thirty categories. Secondly, we hope that our science-practice collaboration can inspire other projects to take this step, especially since hate speech and its mitigation are multifaceted and thus require transdisciplinary approaches.

Finally, if you are working on what causes hate speech or on hate speech interventions, you can expect to hear more from us. Pooling interdisciplinary expertise of researchers across Europe, the Network of Excellence for Training on Hate (NETHATE), funded by the European Union’s Horizon 2020 program, aims to advance what we know about hate speech and its mitigation. As part of NETHATE, Vladimir Bojarskich and Prof. Tobias Rothmund study the role of political ideology in people’s online expressions of hate and will provide new insights into ideology-driven hate speech and mitigation strategies. In Germany, Das NETTZ recently launched a network of excellence against online hate together with HateAid, Jugendschutz.net, and Neue deutsche Medienmacher*innen, thereby combining a host of networking, legal, educational, and journalistic expertise. Charlotte Freihse is a project manager in the Bertelsmann Stiftung’s Upgrade Democracy project, where she focuses primarily on platform governance, disinformation, and hate speech, as well as the impact of new technologies on public opinion-forming and discourse.

References

Álvarez-Benjumea, A., & Winter, F. (2018). Normative Change and Culture of Hate: An Experiment in Online Environments. European Sociological Review, 34(3), 223–237. https://doi.org/10.1093/esr/jcy005

Baldauf, J., Banaszczuk, Y., Koreng, A., Schramm, J., & Stefanowitsch, A. (2015). Geh sterben! Umgang mit Hate Speech und Kommentaren im Internet. Amadeu Antonio Stiftung. https://www.amadeu-antonio-stiftung.de/publikationen/geh-sterben/

Barnidge, M., Kim, B., Sherrill, L. A., Luknar, Ž., & Zhang, J. (2019). Perceived exposure to and avoidance of hate speech in various communication settings. Telematics and Informatics, 44, 101263. https://doi.org/10.1016/j.tele.2019.101263

Bilewicz, M., Tempska, P., Leliwa, G., Dowgiałło, M., Tańska, M., Urbaniak, R., & Wroczyński, M. (2021). Artificial intelligence against hate: Intervention reducing verbal aggression in the social network environment. Aggressive Behavior, 47(3), 260–266. https://doi.org/10.1002/ab.21948

Blaya, C. (2019). Cyberhate: A review and content analysis of intervention strategies. Aggression and Violent Behavior, 45, 163–172. https://doi.org/10.1016/j.avb.2018.05.006

Bundeszentrale für politische Bildung. (2022, August 17). Strategien gegen Hate Speech. bpb.de; Bundeszentrale für politische Bildung. https://www.bpb.de/252408/strategien-gegen-hate-speech

Chu, J. S. G., & Evans, J. A. (2021). Slowed canonical progress in large fields of science. Proceedings of the National Academy of Sciences of the United States of America, 118(41). https://doi.org/10.1073/pnas.2021636118

Cook, J. (2020, March 31). A history of FLICC: the 5 techniques of science denial. Skeptical Science. https://skepticalscience.com/history-FLICC-5-techniques-science-denial.html

Geschke, D., Klaßen, A., Quent, M., & Richter, C. (2019). #Hass Im Netz: Der schleichende Angriff auf unsere Demokratie. Institute for Democracy and Civil Society. https://www.idz-jena.de/fileadmin/user_upload/_Hass_im_Netz_-_Der_schleichende_Angriff.pdf

Hall, N. (2013). Hate Crime. Routledge.

Jaidka, K., Zhou, A., & Lelkes, Y. (2019). Brevity is the Soul of Twitter: The Constraint Affordance and Political Discussion. The Journal of Communication, 69(4), 345–372. https://doi.org/10.1093/joc/jqz023

Kim, J. W., Guess, A., Nyhan, B., & Reifler, J. (2021). The Distorting Prism of Social Media: How Self-Selection and Exposure to Incivility Fuel Online Comment Toxicity. The Journal of Communication, 71(6), 922–946. https://doi.org/10.1093/joc/jqab034

Lorenz-Spreen, P., Oswald, L., Lewandowsky, S., & Hertwig, R. (2023). A systematic review of worldwide causal and correlational evidence on digital media and democracy. Nature Human Behaviour, 7(1), 74–101. https://doi.org/10.1038/s41562-022-01460-1

Pew Research Center. (2021). The State of Online Harassment. https://www.pewresearch.org/internet/2021/01/13/the-state-of-online-harassment/

Rathje, S., Van Bavel, J. J., & van der Linden, S. (2021). Out-group animosity drives engagement on social media. Proceedings of the National Academy of Sciences of the United States of America, 118(26). https://doi.org/10.1073/pnas.2024292118

Reichelmann, A., Hawdon, J., Costello, M., Ryan, J., Blaya, C., Llorent, V., Oksanen, A., Räsänen, P., & Zych, I. (2021). Hate Knows No Boundaries: Online Hate in Six Nations. Deviant Behavior, 42(9), 1100–1111. https://doi.org/10.1080/01639625.2020.1722337

Rudnicki, K., & Steiger, S. (2020). Online Hate Speech: Introduction into motivational causes, effects and regulatory contexts. DeTACT. https://www.media-diversity.org/wp-content/uploads/2020/09/DeTact_Online-Hate-Speech.pdf

Soral, W., Bilewicz, M., & Winiewski, M. (2018). Exposure to hate speech increases prejudice through desensitization. Aggressive Behavior, 44(2), 136–146. https://doi.org/10.1002/ab.21737

Tontodimamma, A., Nissi, E., Sarra, A., & Fontanella, L. (2021). Thirty years of research into hate speech: topics of interest and their evolution. Scientometrics, 126(1), 157–179. https://doi.org/10.1007/s11192-020-03737-6

Treen, K. M. D., Williams, H. T. P., & O’Neill, S. J. (2020). Online misinformation about climate change. WIREs Climate Change, 11(5), e665. https://doi.org/10.1002/wcc.665

Wypych, M., & Bilewicz, M. (2022). Psychological toll of hate speech: The role of acculturation stress in the effects of exposure to ethnic slurs on mental health among Ukrainian immigrants in Poland. Cultural Diversity and Ethnic Minority Psychology. Advance online publication. https://doi.org/10.1037/cdp0000522
